Australia's CRN reports that former Australian Privacy Commissioner Malcolm Crompton has called for the establishment of a formal privacy industry to rethink identity management in an increasingly digital world:

Addressing the Cards & Payments Australasia conference in Sydney this week, Crompton said the online environment needed to become “safe to play” from citizens’ perspective.

While the internet was built as a “trusted environment”, Crompton said governments and businesses had emerged as “digital gods” with imbalanced identification requirements.

“Power allocation is where we got it wrong,” he said, warning that organisations’ unwarranted emphasis on identification had created money-making opportunities for criminals.

Malcolm puts this well. I too have come to see that the imbalance of power between individual users and Internet businesses is one of the key factors blocking the emergence of a safe Internet.

CRN continues:

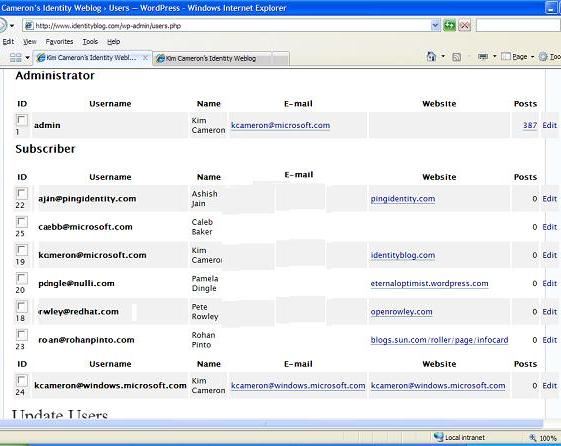

Currently, users were forced to provide personal information to various email providers, social networking sites, and online retailers in what Crompton described as “a patchwork of identity one-offs”.

Not only were login systems “incredibly clumsy and easy to compromise”; centralised stores of personal details and metadata created honeypots of information for identity thieves, he said…

Refuting arguments that metadata – such as login records and search strings – was unidentifiable, Crompton warned that organisations hoarding such information would one day face a user revolt…

He also recommended the use of cloud-based identification management systems such as Azigo, Avoco and OpenID, which tended to give users more control of their information and third-party access rights.

User-centricity was central to Microsoft chief identity architect Kim Cameron’s ‘Laws of Identity’ (pdf), as well as Canadian Privacy Commissioner Ann Cavoukian’s seven principles of ‘Privacy by Design’ (pdf).

Full article here.

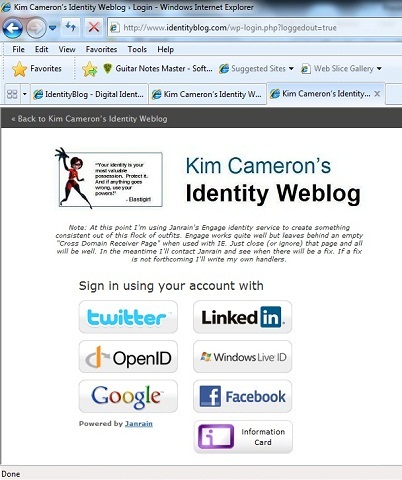

“NASCAR” approach of presenting a bunch of different buttons that redirect the user to, uh, something-or-other-that-can-be-phished, ahem, in spite of the privacy and security problems. This part of the conversation will go on for some time, since these problems will become progressively more widespread as NASCAR gains popularity and the criminally inclined tune in to its potential as a gold mine… But that discussion is for another day.

Last summer I
