Last week Microsoft announced the availability of Version 2 of the U-Prove Technology Preview.

What’s new about it?

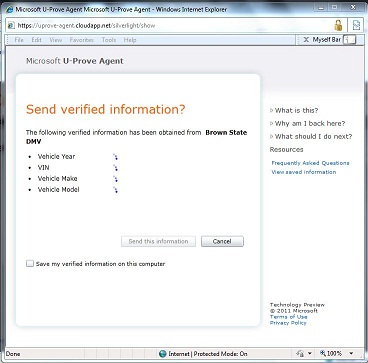

The most important thing is that it offers a new, web-oriented user experience carefully tailored to helping people control the release of “verified claims” while protecting their privacy. By verified claims I mean things that are said about them as flesh-and-blood people by entities that can speak, at least in certain contexts, with authority. By protecting privacy I mean keeping information released to the minimum necessary, and ensuring that the authority making the claims – for example a government – is not able to track and profile the way your information is used.

The system takes a number of the good ideas from CardSpace but is also informed by what CardSpace didn’t do well. It doesn’t require the installation of new components on your computer. It works on all the major browsers and phones. It roams between devices. Sites don't have to worry about users “getting a card” before the system will work. And it allows claims providers and relying parties to shape and brand their users’ experiences while still providing a consistent interface for claims approval.

In other words, it represents a big step forward in protecting privacy when high-value credentials are used to release claims.

A focused approach

When it comes to verified claims, the “U-Prove Agent” goes beyond CardSpace. One way it does this is by being highly focused and integrated into a specific type of identity experience. I’ll be posting a video soon that will help you get a concrete sense of why this works.

That focus represents a change from what we tried to do with CardSpace. One of the key goals of CardSpace was to provide a “generalized solution” – an alternative to the “patchwork quilt” of what I called “identity kludges” that characterize people’s experience of identity on the Internet.

In fact I still believe as much as ever that a “generalized solution” would be nice to have. I would even go so far as to say that a generalized solution is inevitable – at some point in time.

But the current chaos is so vast – and people’s thinking about it so fractured – that the only prudent, practical approach is to carve the problem into smaller pieces. If we can make progress on some of the pieces, we can tie that progress together. The U-Prove Agent for exchange of verified claims is a good example of this, making it possible to offer services that would otherwise be impossible because of privacy problems.

What about CardSpace?

Because of its focus, the U-Prove Agent isn’t capable of doing everything that CardSpace attempted to do using Information Cards.

It doesn’t address the problem of helping users manage ALL their identities while keeping them separate. It doesn’t address the user problems of password fatigue, phishing and pervasive “secret questions” when logging into consumer web sites. It doesn’t solve the famous “home realm discovery problem” when using federation. And perhaps most frustrating when it comes to using devices like phones, it doesn’t give the user a simple way to pick their identities from a set of visual representations (icons or cards).

These issues are all more pressing today than they were in 2006 when CardSpace was first proposed. Yet one thing is clear: in five years of intensive work and great cross-industry collaboration with other innovators working on Apple and Linux computers and phones, we weren’t able to get Information Cards onto the radar of the big web properties users depend on.

Those properties had other priorities. My friend Mike Jones put it well at Self-Issued:

“In my extensive experience talking with potential adopters, while many/most thought that CardSpace was a good idea, because they didn’t see it solving a top-5 pain point that they were facing at that moment or providing immediate compelling value, they never actually allocated resources to do the adoption at their site.”

Regardless of why this was the case, it explains why last week Microsoft also announced that it will not be shipping CardSpace 2.0.

In my personal view, we all certainly need to keep working on the problems Information Cards address, and many of the concepts and technologies used in Information Cards should be retained and evolved. I think the U-Prove team has done a good job of that, and its work provides an example of how we can move forward to solve specific problems. Now the question is how to do so with the other aspects of user-centric identity.

Over the next while I’m going to do a series of posts that explore some of these issues further – drawing some lessons from what we’ve learned over the last few years. Most of all, it is important to remember what great progress we’ve made as an industry around the Identity Metasystem, federation technology, and claims-based computing. The CardSpace identity selector dealt with the hardest and most forward-looking problems of the Metasystem: the privacy, security and usability problems that will emerge as federated identity becomes a key component of the Internet. It also challenged industry with an approach that was truly user-centric.

It's no surprise that it is hardest to get consensus on forward-looking technologies! But meanwhile, the very success of the Identity Metasystem as a whole will cause all the issues we’ve been working on with Information Cards to return larger than life.
