First of all, I have to refer readers to the Office of Inadequate Security, apparently operated by databreaches.net. I suggest heading over there pretty quickly too – the office is undoubtedly going to be so busy you'll have to line up as time goes on.

So far it looks like the go-to place for info on breaches – it even has a Twitter feed for breach junkies.

Recently the Office published an account that raises a lot of questions:

I just read a breach disclosure to the New Hampshire Attorney General’s Office with accompanying notification letters to those affected that impressed me favorably. But first, to the breach itself:

StudentCity.com, a site that allows students to book trips for school vacation breaks, suffered a breach in their system that they learned about on June 9 after they started getting reports of credit card fraud from customers. An FAQ about the breach, posted on www.myidexperts.com explains:

StudentCity first became concerned there could be an issue on June 9, 2011, when we received reports of customers travelling together who had reported issues with their credit and debit cards. Because this seemed to be with 2011 groups, we initially thought it was a hotel or vendor used in conjunction with 2011 tours. We then became aware of an account that was 2012 passengers on the same day who were all impacted. This is when we became highly concerned. Although our processing company could find no issue, we immediately notified customers about the incident via email, contacted federal authorities and immediately began a forensic investigation.

According to the report to New Hampshire, where 266 residents were affected, the compromised data included students’ credit card numbers, passport numbers, and names. The FAQ, however, indicates that dates of birth were also involved.

Frustratingly for StudentCity, the credit card data had been encrypted but their investigation revealed that the encryption had broken in some cases. In the FAQ, they explain:

The credit card information was encrypted, but the encryption appears to have been decoded by the hackers. It appears they were able to write a script to decode some information for some customers and most or all for others.

The letter to the NH AG’s office, written by their lawyers on July 1, is wonderfully plain and clear in terms of what happened and what steps StudentCity promptly took to address the breach and prevent future breaches, but it was the tailored letters sent to those affected on July 8 that really impressed me for their plain language, recognition of concerns, active encouragement of the recipients to take immediate steps to protect themselves, and for the utterly human tone of the correspondence.

Kudos to StudentCity.com and their law firm, Nelson Mullins Riley & Scarborough, LLP, for providing an exemplar of a good notification.

It would be great if StudentCity would bring in some security experts to audit the way encryption was done, and report on what went wrong. I don't say this to be punitive; I agree that StudentCity deserves credit for at least attempting to employ encryption. But the outcome shows that we need programming frameworks that make it easy to get truly robust encryption and key protection – and to deploy it in a minimal disclosure architecture that keeps secrets offline. If StudentCity goes the extra mile in helping others learn from their unfortunate experience, I'll certainly be a supporter.
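To make the "minimal disclosure" idea a little more concrete, here is a rough Python sketch of what a booking site might keep online once a payment has gone through: only the last four digits of the card and a keyed fingerprint, never the card number or anything that decrypts to it. This is purely illustrative and is not a description of StudentCity's system. The names `minimal_card_record` and `same_card` are hypothetical, and the sketch assumes the fingerprint key lives somewhere other than the web server (a separate service or hardware module). That assumption matters: card numbers are short enough that anyone holding the key could guess their way back to them.

```python
import hashlib
import hmac
import secrets


def minimal_card_record(card_number: str, fingerprint_key: bytes) -> dict:
    """Build the only card data the online system keeps (hypothetical helper).

    The record holds the last four digits plus an HMAC-SHA256 fingerprint.
    The full card number is not stored and cannot be decrypted from the record.
    """
    # Strip spaces, dashes and anything else that is not an ASCII digit.
    digits = "".join(ch for ch in card_number if ch.isascii() and ch.isdigit())
    if not 12 <= len(digits) <= 19:
        raise ValueError("unexpected card number length")
    fingerprint = hmac.new(
        fingerprint_key, digits.encode("ascii"), hashlib.sha256
    ).hexdigest()
    return {"last4": digits[-4:], "fingerprint": fingerprint}


def same_card(record: dict, card_number: str, fingerprint_key: bytes) -> bool:
    """Check whether a presented card matches a stored record."""
    candidate = minimal_card_record(card_number, fingerprint_key)
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(record["fingerprint"], candidate["fingerprint"])


# Assumption: in a real deployment this key would be generated and held
# away from the web tier, not created alongside the customer database.
key = secrets.token_bytes(32)
record = minimal_card_record("4111 1111 1111 1111", key)
```

A site built this way can still recognize a returning card and show the customer "card ending in 1111", but a script run against the stolen database has no card numbers to decode.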

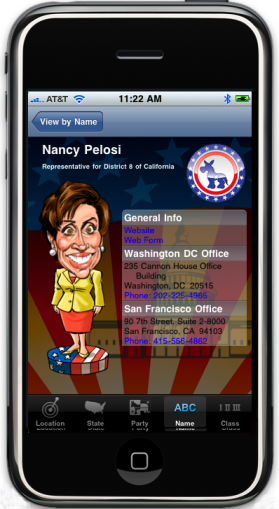

This way you can easily get very valuable and otherwise inaccessible information about a person of interest.
