If you have kept up with the excellent Wall Street Journal series on smartphone apps that inappropriately collect and release location information, you won't be surprised at its latest chapter: federal prosecutors are now investigating the information-sharing practices of mobile applications, and a grand jury is already issuing subpoenas. The Journal says, in part:

Federal prosecutors in New Jersey are investigating whether numerous smartphone applications illegally obtained or transmitted information about their users without proper disclosures, according to a person familiar with the matter…

The criminal investigation is examining whether the app makers fully described to users the types of data they collected and why they needed the information—such as a user's location or a unique identifier for the phone—the person familiar with the matter said. Collecting information about a user without proper notice or authorization could violate a federal computer-fraud law…

Online music service Pandora Media Inc. said Monday it received a subpoena related to a federal grand-jury investigation of information-sharing practices by smartphone applications…

In December 2010, Scott Thurm wrote “Your Apps Are Watching You”, which has now been “liked” by over 13,000 people. It reported that the Journal had tested 101 apps and found that:

… 56 transmitted the phone's unique device identifier to other companies without users’ awareness or consent. Forty-seven apps transmitted the phone's location in some way. Five sent a user's age, gender and other personal details to outsiders. At the time they were tested, 45 apps didn't provide privacy policies on their websites or inside the apps.

In Pandora's case, both the Android and iPhone versions of its app transmitted information about a user's age, gender, and location, as well as unique identifiers for the phone, to various advertising networks. Pandora gathers the age and gender information when a user registers for the service.

Legal experts said the probe is significant because it involves potentially criminal charges that could be applicable to numerous companies. Federal criminal probes of companies for online privacy violations are rare…

The probe centers on whether app makers violated the Computer Fraud and Abuse Act, said the person familiar with the matter. That law, crafted to help prosecute hackers, covers information stored on computers. It could be used to argue that app makers “hacked” into users’ cellphones.

[More here]

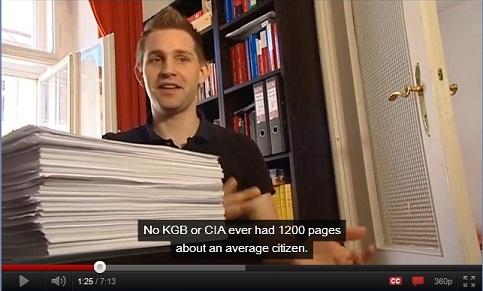

The elephant in the room is Apple's own approach to location information, which should certainly be subject to investigation as well. The user is never presented with a dialog in which Apple's use of location information is explained and permission is obtained. Instead, the user's agreement is gained surreptitiously, hidden away on page 37 of a 45-page policy that Apple users must accept in order to use… iTunes. Why iTunes requires location information is never explained. The policy simply states that the user's device identifier and location are non-personal information and that Apple “may collect, use, transfer, and disclose non-personal information for any purpose”.

Any purpose?

Is it reasonable that companies like Apple can proclaim that device identifiers and location are non-personal and then do whatever they want with them? Informed opinion seems not to agree with them. The International Working Group on Data Protection in Telecommunications, for example, asserted precisely the opposite as early as 2004. Membership of the Group included “representatives from Data Protection Authorities and other bodies of national public administrations, international organisations and scientists from all over the world.”

More empirically, I demonstrated in “Non-Personal information, like where you live” that the combination of device identifier and location is in very many cases (including my own) personally identifying. This is especially true in North America, where many of us live in single-family dwellings.

[BTW, I have not deeply investigated the approach to sharing of location information taken by other smartphone providers – perhaps others can shed light on this.]

First of all, I have to refer readers to the