My friend Cameron Westland, who has worked on some cool applications for the iPhone, wrote me to complain that I linked to iPhone Privacy:

I understand the implications of what you are trying to say, but how is this any different from Mac OS X applications accessing the address book or Windows applications accessing contacts? (I'm not sure about Windows, but I know it's possible on a Mac).

Also, the article touches on storing patient information on an iPhone. I believe Seriot is guilty of a major oversight in simply correlating the fact that SpyPhone has access to contacts with it also being able to do so in a secured enterprise.

If the iPhone is deployed in the enterprise, the corporate administrators can control exactly which applications get installed. In the situations where patient information is stored on the phone, they should be using their own security review process to verify that all applications installed meet the HIPAA certification requirements. Apple makes no claim that applications meet the stringent needs of certain industries – that's why they give control to administrators to encrypt phones, restrict specific application installs, and do remote wipes.

Also, Seriot did no research into the behavior of a phone connected to a company's Active Directory, versus just a plain old address book… This is cargo cult science at best, and I'm really surprised you linked to it!

I buy Cameron's point that the controls available to enterprises mitigate a number of the attacks presented by Seriot – and agree this is important. How do these controls work? Corporate administrators can set policies specifying the digital signatures of applications that can be installed. They can use their own review processes to decide which applications those will be.

None of this depends on App Store verification, sandboxing, or Apple's control of platform content. In fact it is no different from the universally available ability to use a combination of enterprise policy and digital signature to protect enterprise desktop and server systems. Other features, like the ability for an operator to wipe information, are also pretty much universal.
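To make the mechanism concrete, here is a minimal Python sketch of the kind of allowlist decision such a policy encodes. It is a hypothetical model, not Apple's configuration-profile or device-management format: `InstallPolicy`, `approve_signer` and `is_install_allowed` are illustrative names, and the sketch assumes a signer is identified by the SHA-256 fingerprint of its DER-encoded certificate. It models only the policy lookup; it does not verify the signature itself, which a real platform would do before consulting the policy.

```python
import hashlib


def cert_fingerprint(cert_der: bytes) -> str:
    """Return the SHA-256 fingerprint (hex) of a DER-encoded signing certificate."""
    return hashlib.sha256(cert_der).hexdigest()


class InstallPolicy:
    """Hypothetical enterprise policy: only apps whose signer has been approved may be installed."""

    def __init__(self) -> None:
        # Fingerprints of signing certificates that passed the administrator's own review.
        self._approved: set[str] = set()

    def approve_signer(self, cert_der: bytes) -> None:
        """Record a signer as approved after an (assumed) internal security review."""
        self._approved.add(cert_fingerprint(cert_der))

    def is_install_allowed(self, cert_der: bytes) -> bool:
        """Allow installation only if the app's signing certificate is on the allowlist."""
        return cert_fingerprint(cert_der) in self._approved


if __name__ == "__main__":
    policy = InstallPolicy()
    reviewed_cert = b"placeholder bytes for a reviewed vendor certificate"
    policy.approve_signer(reviewed_cert)
    print(policy.is_install_allowed(reviewed_cert))       # True
    print(policy.is_install_allowed(b"unreviewed cert"))  # False
```

Nothing in this decision logic is specific to a phone; the same allowlist check could sit in front of a desktop or server fleet, which is why the protection comes from the administrator's review plus the signature check rather than from the App Store.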

If the iPhone can be locked down in enterprises, why is Seriot's paper still worth reading? Because many companies and even governments are interested in developing customer applications that run on phones. They can't dictate to customers what applications to install, and so lock-down solutions are of little interest. They turn to Apple's own claims about security, and find statements like this one, taken from the otherwise quite interesting iPhone security overview.

Runtime Protection

Applications on the device are “sandboxed” so they cannot access data stored by other applications. In addition, system files, resources, and the kernel are shielded from the user’s application space. If an application needs to access data from another application, it can only do so using the APIs and services provided by iPhone OS. Code generation is also prevented.

Seriot shows that taking this claim at face value would be risky. As he says in an eWeek interview:

“In late 2009, I was involved in discussions with the Swiss private banking industry regarding the confidentiality of iPhone personal data,” Seriot told eWEEK. “Bankers wanted to know how safe their information [stores] were, which ones are exactly at risk and which ones are not. In brief, I showed that an application downloaded from the App Store to a standard iPhone could technically harvest a significant quantity of personal data … [including] the full name, the e-mail addresses, the phone number, the keyboard cache entries, the Wi-Fi connection logs and the most recent GPS location.”

It is worth noting that Seriot's demonstration is very easy to replicate, and doesn't depend on silly assumptions like convincing the user to disable their security settings and ignore all warnings.

The points made about banking applications apply even more to medical applications. Doctors are effectively customers from the point of view of the information management services they use. Those services won't be able to dictate the applications their customers deploy. I know for sure that my doctor, bless his soul, doesn't have an IT department that sets policies limiting his ability to play games or buy stocks. If he starts using his phone for patient-related activities, he should be aware of the potential issues, and that's what MedPage was talking about.

Neither MedPage, nor CNET, nor eWeek, nor Seriot, nor I am trying to trash the iPhone; it's just that application isolation is one of the hardest problems of computer science. We are pointing out that the iPhone is a computing device like all the others and subject to the same laws of digital physics, despite dangerous mythology to the contrary. On this point I don't think Cameron Westland and I disagree.

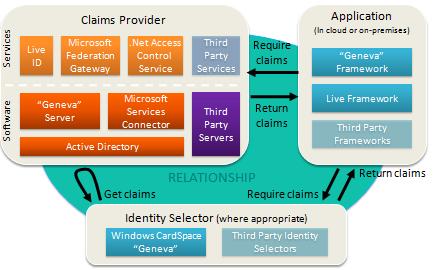

We have another announcement that really drives home the flexibility of claims.
