Keith Brown points out that we need permanent web resources that teach people what Information Cards are, how they work, how to use them, and so on:

As I add support for information cards to Pluralsight, I'm rather surprised that I'm having trouble finding official landing pages for consumers. For example, on our logon page, there will be a button to click to log in using an information card, kind of like what you see on Kim's login page. For people who don't know what an information card is, this might be confusing, so of course we'll want a link that points to some documentation. But right now it seems as though everyone is creating their own descriptions for this. Here's Kim's what is an information card page, for example.

It seems as though it would help adoption if there were some centralized descriptions of this stuff. Do these pages exist and I'm just missing them? Or is it that Microsoft only wants to talk about CardSpace, which is their implementation of the selector? I note that when Kim wants to tell you how to install an identity selector, he points to a WordPress blog called the Pamela Project, which doesn't seem too helpful, but might be interesting for someone wanting to add support for information cards to their WordPress blog.

It seems to me that if the industry really wants consumers to start adopting information cards, somebody's going to have to explain this stuff in terms my mother can understand, and it would help to have a common place where those explanations live.

In another post, discussing the issue with Richard Turner, he adds:

In my opinion, somebody (Microsoft?) needs to break this holding pattern fast. I agree that things aren't going to take off until there are more relying parties. But as a guy who is busy doing just that (adding support for infocard to pluralsight.com), it doesn't make me feel very comfortable that those consumer landing pages I talked about in my post don't already exist on the web. I happen to be very committed to this technology, so I'm going to implement a relying party no matter what. Other websites might not be so inclined.

I agree this would help – and simplify our lives. When I InfoCard-enabled my site, I had to cobble stuff up from scratch. It was tedious since none of the materials existed. Maybe that's why my help screens are a bit, as Keith is too polite to tell you, crude.

It sure would be neat to have a PERMANENT location everyone's Information Card help links can point to. That would provide consistency, and let us get some really good resources together, including videos.

I'll bounce this idea around with others here at Microsoft and see how we can play, as Keith says, a leadership role in making this happen.

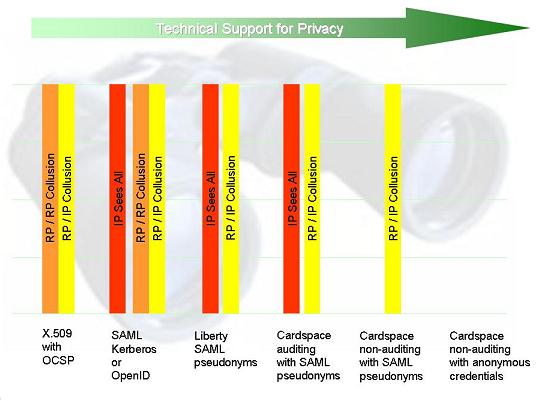

What is good about X.509 is that if a relying party does not collude, the CA has no visibility into the fact that a given user has visited that relying party (we will see that in some other systems such visibility is unavoidable). But a relying party could at any point decide to collude with the CA (assuming the CA actually accepts such information, which may be a breach of policy). This might result in the transfer of information in either direction beyond that contained in the certificate itself.