Still further to my recent piece on delegation, Eric Norman writes to give another example of a user-absent scenario. Again, to me, it is an example of a user delegating rights to a service which should run under its own identity, presenting the delegation token given to it by the user.

For an example of user-absent scenarios, look at grid computing. In this scenario, a researcher is going to launch a long-running batch job into the computing grid. Such a job may run for days and the researcher needs to go home and feed the dog and may be absent if a particular stage in the job requires authentication. The grid folks have invented a “proxy certificate” for this case. While it’s still the case that a user is present when their “main” identity is used, the purpose of the proxy cert is to delegate authentication to an agent in their absence such that if that agent is compromised, all the researcher loses is that temporary credential.

Perhaps this doesn’t count as a “user absent scenario”. Nevertheless, I think it’s certainly relevant to discussions about delegation.

I agree this is relevant. The proxy cert is a kind of practical hybrid that gets to some of what we are trying to do without attempting to fix the underlying infrastructure. It's way better than what we've had before, and a step on the right road. But I think those behind proxy certs will likely agree with me about the theoretical issues under discussion here.
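To make the shape of such a delegation concrete, here is a minimal sketch of a short-lived, scope-limited token that names the agent it is delegated to. It is not how grid proxy certificates are actually built: they rest on public key, whereas this sketch swaps in a shared-secret HMAC from the Python standard library purely to stay self-contained. The function names, field names, and scope strings are all hypothetical.

```python
import hashlib
import hmac
import json
import time
from typing import Optional


def issue_delegation(user_key: bytes, user: str, agent: str,
                     scope: list, ttl_seconds: int,
                     now: Optional[float] = None) -> dict:
    """The user hands the agent a token limited in scope and lifetime."""
    now = time.time() if now is None else now
    body = {
        "delegator": user,          # who granted the rights
        "delegate": agent,          # the agent's OWN identity, not the user's
        "scope": sorted(scope),     # what the agent may do
        "expires": now + ttl_seconds,
    }
    payload = json.dumps(body, sort_keys=True).encode()
    # ASSUMPTION: HMAC stands in for the public-key signature a real scheme would use.
    tag = hmac.new(user_key, payload, hashlib.sha256).hexdigest()
    return {"body": body, "tag": tag}


def check_delegation(user_key: bytes, token: dict, agent: str,
                     action: str, now: Optional[float] = None) -> bool:
    """Accept only an untampered, unexpired token presented by the named agent."""
    now = time.time() if now is None else now
    body = token["body"]
    payload = json.dumps(body, sort_keys=True).encode()
    expected = hmac.new(user_key, payload, hashlib.sha256).hexdigest()
    return (
        hmac.compare_digest(expected, token["tag"])
        and body["delegate"] == agent
        and action in body["scope"]
        and now < body["expires"]
    )


# Hypothetical usage: a researcher delegates one hour of limited rights.
key = b"demo-only-secret"
token = issue_delegation(key, "researcher", "batch-agent",
                         ["read-dataset", "write-results"],
                         ttl_seconds=3600, now=1000.0)
allowed = check_delegation(key, token, "batch-agent", "write-results", now=2000.0)
expired = check_delegation(key, token, "batch-agent", "write-results", now=5000.0)
```

If the agent is compromised, the attacker holds only this token: it names the agent rather than the researcher, covers only the listed actions, and stops working once it expires.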

As an aside, it's interesting that their scheme is based on public key, and that's what makes delegation across multiple parties “tractable” even in a less than perfect form. I say public key without at all limiting my point to X.509.

With respect to the problem of having identities on different devices, Eric adds:

Um, I think one of the scenarios Eve might have had in mind is the use of smart cards. A lot of people think that the “proper” way smart cards should operate is that secrets (e.g. private keys) are generated on the card and will reside on that card for their entire life and cannot be copied anywhere else. I’m not commenting on whether that’s really proper or not, but there sure are a lot of folks who think it is, and there are manufacturers that are creating smart cards that do indeed exhibit that behavior.

If users are doing million dollar bank transfers, I think it makes sense to keep their keys in a self-destroying dongle. In many other cases, it makes sense to let users move them around. After all, right now they spew their passwords and usernames into any dialog box that opens in front of them, so controlled movement of keys from one device to another would be a huge step forward.

In terms of the deeper discussion about devices, I think we also have to be careful to separate between credentials and digital identities. For example, I could have one digital identity, in the sense of a set of claims my employer or my bank makes about me, and I could prove my presence to that party using several different credentials-in-the-strict-sense: a key on a smart card when I was at work; a key on a phone while on the road; even, if the sky was falling and there was an emergency, a password and backup questions.

If we don't clearly make this distinction, we'll end up in a “fist full of dongles” nightmare that will even make Clint Eastwood run for the hills. When I hear people talk about CardSpace as a “credential selector” it makes my hair stand on end: it is an identity selector, and various credentials can be used at different times to prove to the claims issuer that I am some given subject.
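The distinction can be put as a small data model. The following is only an illustrative sketch, not a description of how CardSpace or any real claims issuer is implemented: the class names, fields, and sample values are all hypothetical. The point it shows is that several credentials resolve to one identity, and the claims belong to the identity rather than to any credential.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Credential:
    """Something used to prove presence to the issuer (hypothetical model)."""
    kind: str        # e.g. "smartcard-key", "phone-key", "password"
    secret_id: str   # stand-in for a key fingerprint or a password hash


@dataclass
class DigitalIdentity:
    """The set of claims one issuer makes about one subject."""
    subject: str
    claims: dict
    credentials: set = field(default_factory=set)


class ClaimsIssuer:
    """Toy issuer: many credentials may map to the same identity."""

    def __init__(self):
        self._by_credential = {}

    def enroll(self, identity: DigitalIdentity, credential: Credential) -> None:
        identity.credentials.add(credential)
        self._by_credential[credential] = identity

    def authenticate(self, credential: Credential):
        # Returns the identity this credential proves, or None if unknown.
        return self._by_credential.get(credential)


issuer = ClaimsIssuer()
employee = DigitalIdentity("alice", {"employer": "ExampleCorp", "role": "engineer"})

at_work = Credential("smartcard-key", "fp-01")
on_the_road = Credential("phone-key", "fp-02")
emergency = Credential("password", "hash-03")
for cred in (at_work, on_the_road, emergency):
    issuer.enroll(employee, cred)

# Three different credentials, one and the same digital identity.
same_identity = (
    issuer.authenticate(at_work)
    is issuer.authenticate(on_the_road)
    is issuer.authenticate(emergency)
)
```

Losing or revoking one credential in this model removes one way of proving presence; the identity and its claims are untouched.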

Speaking of smart card credentials, one of the big problems in last-generation use of smartcards was that if a trojan was running on your machine, it could use your smartcard and perform signatures without your knowledge. Worst of all, smartcards lend themselves to cross-site scripting attacks (not possible with CardSpace). To me this is yet another call to have the user involved in the process of activating the trusted device.

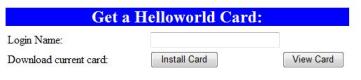

In the case described by Pat, the site really does use a “registration” model like the one from BestBuy shown here.
