I concluded my last piece on linkability and identity technology by explaining that the probability of collusion between Relying Parties (RPs) CAN be greatly reduced by using SAML tokens rather than X.509 certificates. To provide an example of why this is so, I printed out the content of one of the X.509 certificates I use at work, and here's what it contained:

| Field | Value |
| --- | --- |
| Version | V3 |
| Serial Number | 13 9b 3c fc 00 03 00 19 c6 e2 |
| Signature Algorithm | sha1RSA |
| Issuer | CN = IDA Enterprise CA 1 |
| Valid From | Friday, February 23, 2007 8:15:27 PM |
| Valid To | Saturday, February 23, 2008 8:15:27 PM |
| Subject | CN = Kim Cameron, OU = Users, DC = IDA, DC = Microsoft, DC = com |
| Public Key | 25 15 e3 c4 4e d6 ca 38 fe fb d1 41 9f ee 50 05 dd e0 15 dc d6 2a c3 cc 98 53 7e 9e b4 c7 a5 4c a7 64 56 66 1e 3d 36 4a 11 72 0a eb cf c9 d2 6c 1f 2e b2 2a 67 4f 07 52 70 36 f2 89 ec 98 09 bd 61 39 b1 52 07 48 9d 36 90 9c 7d de 61 61 76 12 5e 19 a5 36 e2 11 ea 14 45 b1 ba 12 e3 e2 d5 67 81 d1 1f bb 04 b1 cc 52 c2 e5 3e df 09 3d 2b a5 |
| Subject Key Identifier | 35 4d 46 4a 13 c1 ae 81 3b b8 b5 f4 86 bb 2a c0 58 d7 ad 92 |
| Enhanced Key Usage | Client Authentication (1.3.6.1.5.5.7.3.2) |
| Subject Alternative Name | Other Name – Principal Name=kc@microsoft.com |
| Thumbprint | b9 c6 4a 1a d9 87 f1 cb 34 6c 92 50 20 1b 51 51 87 d5 a8 ee |

Everything shown is released every time I use the certificate – which is basically every time I go to a site that asks either for “any old certificate” or for a certificate from my certificate authority. (As far as I know, the information is offered up before verifying that the site isn't evil.) You can see that there is a lot of information leakage. X.509 certificates were designed before the privacy implications (to both individuals and their institutions) were well understood.

Beyond leaking potentially unnecessary information (like my email address), each of the fields shown in yellow is a correlation key that links my identity in one transaction to that in another – either within a single site or across multiple sites. Put another way, each yellow field is a handle that can be used to correlate my profiles. It's nice to have so MANY potential handles available, isn't it? Choosing between serial number, subject DN, public key, key identifier, alternative name and thumbprint is pretty exhausting, but any of them will work when trying to build a super-dossier.

I call this a “maximal disclosure token” because the same information is released everywhere you go, whether it is required or not. Further, it includes not one, but a whole set of omnidirectional identifiers (see law 4).
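To make the correlation risk concrete, here is a minimal Python sketch of how two colluding relying parties could merge their records once they both see the same certificate field. The log contents, the shortened thumbprint values and the `build_dossier` helper are all made up for illustration; any of the other stable fields (serial number, subject, public key) would serve equally well as the join key.

```python
# Hypothetical visit logs kept by two unrelated relying parties.
# The thumbprint values are shortened, made-up stand-ins for the
# 20-byte certificate thumbprint each site sees during authentication.
bookstore_log = [
    {"thumbprint": "b9c64a1a", "purchase": "travel guide"},
    {"thumbprint": "77aa01ff", "purchase": "cookbook"},
]
pharmacy_log = [
    {"thumbprint": "b9c64a1a", "prescription": "allergy medication"},
]


def build_dossier(*logs):
    """Merge records from several sites, keyed on the shared thumbprint."""
    dossier = {}
    for log in logs:
        for record in log:
            key = record["thumbprint"]
            # Everything except the join key is profile data.
            details = {k: v for k, v in record.items() if k != "thumbprint"}
            dossier.setdefault(key, {}).update(details)
    return dossier


dossier = build_dossier(bookstore_log, pharmacy_log)
```

No cooperation from the certificate authority is needed here: the join works simply because the same identifier is presented at both sites.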

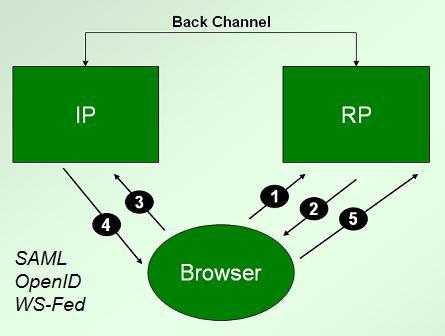

SAML tokens represent a step forward in this regard because, being constructed at the time of usage, they only need to contain information relevant to a given transaction. With protocols like the redirect protocol described here, the identity provider knows which relying party a user is visiting.

The Liberty Alliance has been forward-thinking enough to use this knowledge to avoid leaking omnidirectional handles to relying parties, through what it calls pseudonyms. For example, “persistent” and “transient” pseudonyms can be put in the tokens by the identity provider, rather than omnidirectional identifiers, and the subject key can be different for every invocation (or skipped altogether).

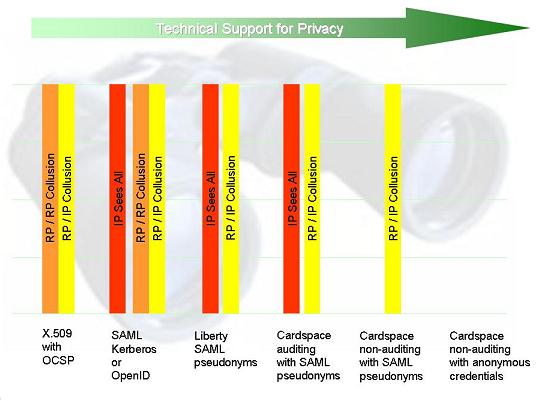
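The difference between persistent and transient pseudonyms can be sketched in a few lines of Python. This is only one possible way an identity provider might generate them, not something the Liberty specifications prescribe: the keyed-hash derivation, the `IDP_SECRET` value and both function names are assumptions made for illustration.

```python
import hashlib
import hmac
import secrets

# Hypothetical secret known only to the identity provider (demo value).
IDP_SECRET = b"demo-only-secret"


def persistent_pseudonym(user_id: str, rp_id: str) -> str:
    """Stable for one (user, relying party) pair, different at every other site."""
    message = f"{user_id}|{rp_id}".encode("utf-8")
    return hmac.new(IDP_SECRET, message, hashlib.sha256).hexdigest()


def transient_pseudonym() -> str:
    """A fresh random value for each token, never reused."""
    return secrets.token_hex(16)
```

With identifiers like these, two relying parties comparing notes find no common value to join on, while the identity provider can still map each pseudonym back to the user.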

As a result, while the identity provider knows more about the sites visited by its users, and about the information of relevance to those sites, the ability of the sites to create cross-site profiles without the participation of the identity provider is greatly reduced. SAML does not employ maximal disclosure tokens. So in the threat diagram shown at the right I've removed the RP/RP collusion threat, which now pales in comparison to the other two.

As we will see, this does NOT mean the SAML protocol uses minimal disclosure tokens, and the many intricate issues involved are treated in a balanced way by Stefan Brands here. One very interesting argument he makes is that the relying party (he calls it the “service provider” or SP) actually suffers a decrease in control relative to the identity provider (IP) in these redirection protocols, while the IP gains power at the expense of the RP. For example, if Liberty pseudonyms are used, the IP will know all the customers employing a given RP, while the RP will have no direct relationship with them. I look forward to finding out, perhaps over a drink with someone who was present, how these technology proposals aligned with various business models as they were being elaborated.

To see how a SAML token compares with an X.509 certificate, consider this example:

You'll see there is an assertionID, which is different for every token that is minted. Typically it would not link a user across transactions, either within a given site or across multiple sites. There is also a “name identifier”. In this case it is a public identifier. In others it might be a pseudonym or “unidirectional identifier” recognized only by one site. It might even be a transient identifier that will only be used once.

Then there are the attributes – hopefully not all the possible attributes, but just the ones that are necessary for a given transaction to occur.
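Releasing only the necessary attributes amounts to filtering what the identity provider knows against a per-site policy at the moment the token is built. A minimal Python sketch follows; the attribute names, the relying party URLs, the policy table and the `attributes_for` helper are all hypothetical.

```python
# Everything the identity provider knows about one user (made-up values).
USER_ATTRIBUTES = {
    "email": "user@example.com",
    "over_21": True,
    "employee_id": "E-1001",
    "department": "Identity",
}

# Hypothetical release policy: which attributes each relying party
# actually needs for its transactions.
RELEASE_POLICY = {
    "https://wine-shop.example": {"over_21"},
    "https://expenses.example": {"employee_id", "department"},
}


def attributes_for(rp_id, attributes=USER_ATTRIBUTES, policy=RELEASE_POLICY):
    """Return only the attributes the policy allows for this relying party."""
    allowed = policy.get(rp_id, set())  # unknown sites get nothing
    return {name: value for name, value in attributes.items() if name in allowed}
```

Defaulting to an empty set for unknown sites is a deliberate choice in this sketch: a relying party with no policy entry receives no attributes at all.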

Putting all of this together, the result is an identity provider which has a great deal of visibility into and control over what is revealed where, but more protection against cross-site linking if it handles the release of attributes on a need-to-know basis.

What is good about X.509 is that if a relying party does not collude, the CA has no visibility into the fact that a given user has visited it (we will see that in some other systems such visibility is unavoidable). But a relying party could at any point decide to collude with the CA (assuming the CA actually accepts such information, which may be a breach of policy). This might result in the transfer of information in either direction beyond that contained in the certificate itself.
