I received a helpful and informed comment from Michael Hanson of Mozilla Labs on the Street View MAC address issue:

I just wanted to chip in and say that the practice of wardriving to create an SSID/MAC geolocation database is hardly unique to Google.

The practice was invented by Skyhook Wireless, formerly Quarterscope. The iPhone, pre-GPS, integrated the technology to power the Maps application. There was some discussion of how this technology would work back in 2008, but it didn't really break out beyond the community of tech developers. I'm not sure what the connection between Google and Skyhook is today, but I do know that Android can use the Skyhook database.

Your employer recently signed a deal with Navizon, a company that employs crowdsourcing to construct a database of WiFi endpoints.

Anyway – I don't mean to necessarily weigh in on the question of the legality or ethics of this approach, as I'm not quite sure how I feel about it yet myself. The alternative to a decentralized anonymous geolocation system is one based on a) GPS, which requires the generosity of a space-going sovereign to maintain the satellites and has trouble in dense urban areas, or b) the cell towers, which are inefficient and are used to collect our phones’ locations. There's a recent paper by Constandache et al. at Duke that addresses the question of whether it can be done with just inertial reckoning… but it's a tricky problem.

Thanks for the post.

The scale of the “wardriving” [can you believe the name?] boggles my mind, and the fact that this has gone on for so long without attracting public attention is a little incredible. But in spite of the scale, I don't think the argument that it's OK to do something because other people have already done it will hold much water with regulators or the thinking public. In fact it all sounds a bit like a teenager trying to get out of detention because he was “just doing what Johnny did.”

As Michael says, one can argue that there are benefits to drive-by device identity theft. In fact, one can argue that there would be benefits to appropriating and reselling all kinds of private information and property. But in most cases we hold ourselves back, and find other, socially acceptable ways of achieving the same benefits. We should do the same here.

Are these databases decentralized and anonymous?

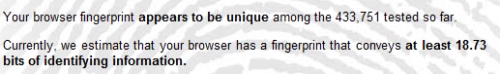

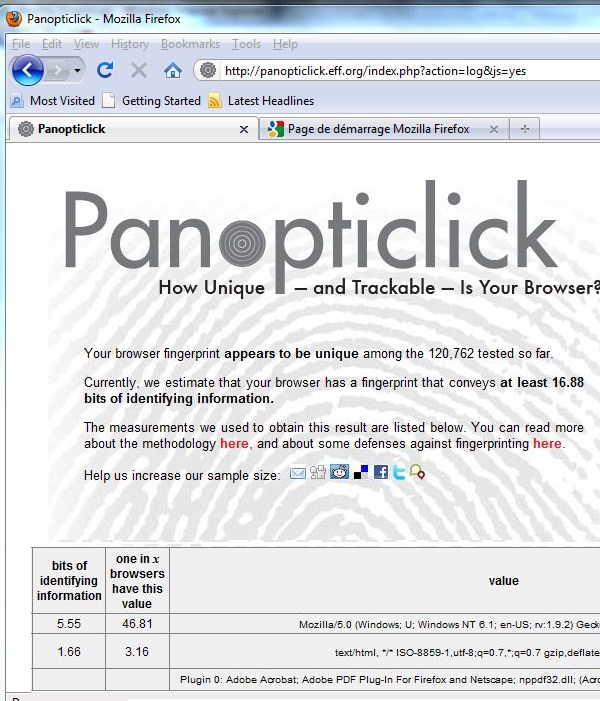

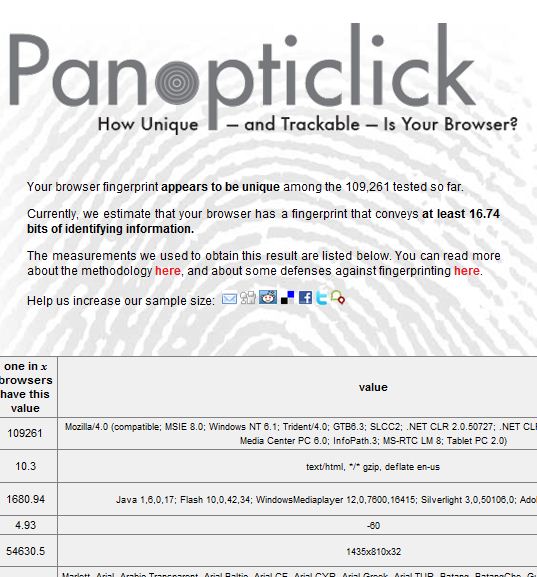

As hard as I try, I don't see how one can say the databases are decentralized and anonymous. For starters, they are highly centralized, allowing monetized lookup of any MAC address in the world. Secondly, they are not anonymous – the databases contain the identity information of our personal devices as well as their exact locations in molecular space. It is strange to me that personal information can just be “declared to be public” by those who will benefit from that in their businesses.

Do these databases protect our privacy in some way?

No – they erode it more than before. Why?

Location information has long been available to our telephone operators, since they use cell-tower triangulation. This conforms to the Law of Justifiable Parties – they need to know where we are (though not to remember it) to provide us with our phone service.

But now yet another party has insinuated itself into the mobile location equation: the MAC database operator – be it Google, Skyhook or Navizon.

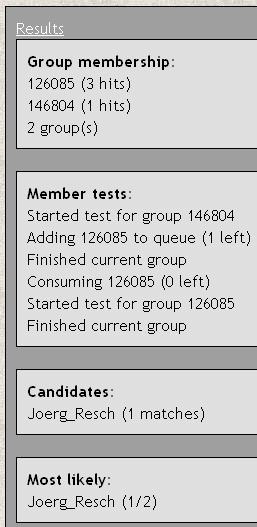

If you carry a cell phone that uses one of these databases – and maybe you already do – your phone queries the database for the locations of the MAC addresses it detects. This means that in addition to your phone company, a database company is constantly being informed of your exact location. From what Michael says, it seems the cell phone vendor might also insert itself into this location reporting. None of these parties has any business being part of the location transaction unless you specifically opt to include them.

Exactly what MAC addresses does your phone collect and submit to the database for location analysis? It could well be all the MAC addresses detected in its vicinity, including those of other phones and devices… You would then be revealing not only your own location information, but that of your friends, colleagues, and even complete strangers who happen to be passing by – even if they have their location features turned off!

Having broken into our home device-space to take our network identifiers without our consent, these database operators are thus able to turn themselves into intelligence services that know not only the locations of people who have opted into their system, but of people who have opted out. I predict that this situation will not be allowed to stand.

Are there any controls on this: on what WiFi-sniffing outfits can do with their information, on how they relate it to other information collected about us, and on whom they sell it to?

I don't know anything about Navizon or the way it uses crowdsourcing, but I am no happier with the idea that crowds are – probably without their knowledge – eavesdropping on my network to the benefit of some technology outfit. Do people know how they are being used to scavenge private network identifiers – and potentially even the device identifiers of their friends and colleagues?

Sadly, it seems we might now have a competitive environment in which all the cell phone makers will want to employ these databases. The question for me is one of whether, as these issues come to the attention of the general public and its representatives, a technology breaking two Laws of Identity will actually survive without major reworking. My prediction is that it will not.

Reaping private identifiers is a mistake that, uncorrected, will haunt us as we move into the age of the smart home and the smart grid. Sooner or later society will nix it as acceptable behavior. Technologists will save a lot of trouble if we make our mobile location systems conform with reasonable expectations of privacy and security starting now.

Alan Eustace, Google's Senior VP of Engineering & Research,