Randall Stross has a piece in the NYT that hits the jackpot in explaining to non-technical readers what's wrong with passwords and how Information Cards help:

“I once felt ashamed about failing to follow best practices for password selection — but no more. Computer security experts say that choosing hard-to-guess passwords ultimately brings little security protection. Passwords won’t keep us safe from identity theft, no matter how clever we are in choosing them.

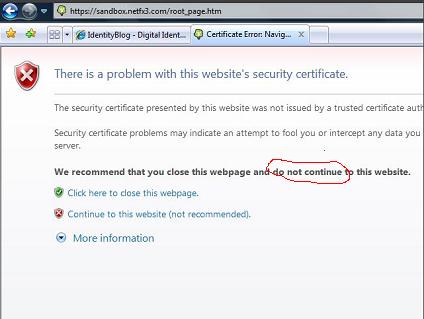

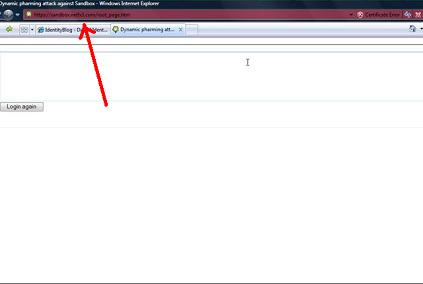

“That would be the case even if we had done a better job of listening to instructions. Surveys show that we’ve remained stubbornly fond of perennial favorites like “password,” “123456” and “LetMeIn.” The underlying problem, however, isn’t their simplicity. It’s the log-on procedure itself, in which we land on a Web page, which may or may not be what it says it is, and type in a string of characters to authenticate our identity (or have our password manager insert the expected string on our behalf).

“This procedure — which now seems perfectly natural because we’ve been trained to repeat it so much — is a bad idea, one that no security expert whom I reached would defend.”

“The solution urged by the experts is to abandon passwords — and to move to a fundamentally different model, one in which humans play little or no part in logging on. Instead, machines have a cryptographically encoded conversation to establish both parties’ authenticity, using digital keys that we, as users, have no need to see.

“In short, we need a log-on system that relies on cryptography, not mnemonics.

“As users, we would replace passwords with so-called information cards, icons on our screen that we select with a click to log on to a Web site. The click starts a handshake between machines that relies on hard-to-crack cryptographic code…”

Randall's piece also drills into OpenID. Summarizing, he sees it as a password-based system, and therefore a diversion from what's really important:

“OpenID offers, at best, a little convenience, and ignores the security vulnerability inherent in the process of typing a password into someone else’s Web site. Nevertheless, every few months another brand-name company announces that it has become the newest OpenID signatory. Representatives of Google, I.B.M., Microsoft and Yahoo are on OpenID’s guiding board of corporations. Last month, when MySpace announced that it would support the standard, the nonprofit foundation OpenID.net boasted that the number of “OpenID enabled users” had passed 500 million and that “it’s clear the momentum is only just starting to pick up.”

“Support for OpenID is conspicuously limited, however. Each of the big powers supposedly backing OpenID is glad to create an OpenID identity for visitors, which can be used at its site, but it isn’t willing to rely upon the OpenID credentials issued by others. You can’t use Microsoft-issued OpenID at Yahoo, nor Yahoo’s at Microsoft.

“Why not? Because the companies see the many ways that the password-based log-on process, handled elsewhere, could be compromised. They do not want to take on the liability for mischief originating at someone else’s site.”

Randall is right that when people use passwords to authenticate to their OpenID provider, the system is vulnerable to many phishing attacks. But there's an important point to be made: these problems are caused by their use of passwords, not by their use of OpenID.

When people authenticate to OpenID in a reliable way – for example, by using Information Cards – the phishing attacks are no longer possible, as I explain in this video. At that point, OpenID becomes a safe and convenient way to use a public persona.

The question of whether and when large sites will accept the OpenIDs issued by other large sites is a more complicated one. I discussed a number of the issues here. The problem is that for many applications, there needs to be a layer of governance on top of the basic identity technology. What happens when something goes wrong? Are there reliability and quality of service guarantees? If information is leaked, who is responsible? How is fiscal liability established? And by the way, we need to figure this out in order to use any federation technology, whether OpenID, SAML or WS-Trust.

So far, these questions are being answered on an ad hoc basis, since there are no established frameworks. I think you can divide what's happening into two approaches, both of which make a lot of sense:

First, there are relying parties that limit the use of OpenID to so-called low-value resources. A great example is the French telecom company Orange. It will accept IDs from any OpenID provider – but just for free services. Blogger and others use this approach as well.

Second, there is the tack of using the protocol for higher-value purposes, but limiting the providers accepted to those with whom a governance agreement can be put in place. Microsoft's HealthVault, for example, currently accepts OpenIDs from two providers, and plans to extend this as it understands the governance issues better. I look at it as a very early example of a governance-oriented approach.
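To make the contrast concrete, the two approaches can be sketched as a relying party's acceptance policy. This is only an illustration under stated assumptions: the `Resource` type, the `accepts` function and the provider hosts are hypothetical names, not anything Orange or HealthVault actually runs, and the sketch assumes the OpenID provider's endpoint has already been discovered and verified by the protocol itself.

```python
from dataclasses import dataclass
from urllib.parse import urlparse

# Hypothetical hosts of providers with whom a governance agreement exists.
GOVERNED_PROVIDERS = {"provider-a.example", "provider-b.example"}


@dataclass(frozen=True)
class Resource:
    """A resource offered by the relying party (illustrative type)."""
    name: str
    high_value: bool


def provider_host(provider_url: str) -> str:
    """Return the lowercase host of a provider endpoint URL, or '' if absent."""
    return (urlparse(provider_url).hostname or "").lower()


def accepts(resource: Resource, provider_url: str) -> bool:
    """Decide whether an OpenID from this provider may access the resource."""
    host = provider_host(provider_url)
    if not host:
        # Not a usable provider URL at all.
        return False
    if not resource.high_value:
        # First approach: any provider is fine for low-value resources.
        return True
    # Second approach: high-value resources require a governed provider.
    return host in GOVERNED_PROVIDERS
```

With a policy like this, a free service would admit an OpenID from any provider, while a high-value service would admit only providers on the governed list. Extending acceptance then becomes a matter of adding providers as governance agreements are reached.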

I strongly believe OpenID moves identity forward. The issues of password attacks don't go away – in fact the vulnerabilities are potentially worse to the extent that a single password becomes the gate to more resources. But technologies like Information Cards will solve these problems. There is a tremendous synergy here, and that is the heart of the matter. Randall writes:

“We won’t make much progress on information cards in the near future, however, because of wasted energy and attention devoted to a large distraction, the OpenID initiative.”

But I think this energy and attention will take us in the right direction as it shines the spotlight on the benefits and issues of identity, wagging identity's “long tail”.