Niraj Chokshi has published a piece in The Atlantic where he grapples admirably with the issues related to Google's collection and use of device fingerprints (technically called MAC Addresses). It is important and encouraging to have journalists like Niraj taking the time to explore these complex issues.

But I have to say that such an exploration is really hard right now.

Whether on purpose or by accident, the Google PR machine is still handing out contradictory messages. In particular, the description in Google's Refresher FAQ titled “How does this location database work?” is currently completely different from (read: the opposite of) what its public relations people are telling journalists like Niraj. I think reestablishing credibility around location services requires the messages to be made consistent so they can be verified by data protection authorities.

Here are some excerpts from the piece – annotated with some comments by me. [Read the whole article here.]

The Wi-Fi data Google collected in over 30 countries could be more revealing than initially thought…

Google's CEO Eric Schmidt has said the information was hardly useful and that the company had done nothing with it. The search giant has also been ordered (or sought) to destroy the data. According to their own blog post, Google logged three things from wireless networks within range of their vans: snippets of unencrypted data; the names of available wireless networks; and a unique identifier associated with devices like wireless routers. Google blamed the collection on a rogue bit of code that was never removed after it had been inserted by an engineer during testing.

[The statement about rogue code is an example of the PR ambiguity Niraj and other journalists must deal with. Google blogs don't actually blame the collection of unique identifiers on rogue code, although they seem crafted to leave people with that impression. Spokesmen only blame rogue code for the collection of unencrypted data content (e.g. email messages). – Kim]

Each of the three types of data Google recorded has its uses, but it's that last one, the unique identifier, that could be valuable to a company of Google's scale. That ID is known as the media access control (MAC) address and it is included — unencrypted, by design — in any transfer, blogger Joe Mansfield explains.

Google says it only downloaded unencrypted data packets, which could contain information about the sites users visited. Those packets also include the MAC address of both the sending and receiving devices — the laptop and router, for example.

[Another contradiction: Google PR says it “only” collected unencrypted data packets, but Google's GStumbler report says its cars did collect and record the MAC addresses from encrypted data frames as well. – Kim]

A company as large as Google could develop profiles of individuals based on their mobile device MAC addresses, argues Mansfield:

Get enough data points over a couple of months or years and the database will certainly contain many repeat detections of mobile MAC addresses at many different locations, with a decent chance of being able to identify a home or work address to go with it.

Now, to be fair, we don't know whether Google actually scrubbed the packets it collected for MAC addresses and the company's statements indicate they did not. [Yet the GStumbler report says ALL MAC addresses were recorded – Kim]. The search giant even said it “cannot identify an individual from the location data Google collects via its Street View cars.” Add a step, however, and Google could deduce an individual from the location data, argues Avi Bar-Zeev, an employee of Microsoft, a Google competitor.

[Google] could (opposite of cannot) yield your identity if you've used Google's services or otherwise revealed it to them in association with your IP address (which would be the public IP of your router in most cases, visible to web servers during routine queries like HTTP GET). If Google remembered that connection (and why not, if they remember your search history?), they now have your likely home address and identity at the same time. Whether they actually do this or not is unclear to me, since they say they can't do A but surely they could do B if they wanted to.

Theoretically, Google could use the MAC address for a mobile device — an iPod, a laptop, etc. — to build profiles of an individual's activity. (It's unclear whether they did and Google has indicated that they have not.) But there's also value in the MAC addresses of wireless routers.

Once a router has been associated with a real-world location, it becomes useful as a reference point. The Boston company Skyhook Wireless, for example, has long maintained a database of MAC addresses, collected in a (slightly) less-intrusive way. Skyhook is the primary wireless positioning system used by Apple's iPhone and iPod Touch. (See a map of their U.S. coverage here.) When your iPod Touch wants to retrieve the current location, it shares the MAC addresses of nearby routers with Skyhook which pings its database to figure out where you are.
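To make the lookup described above concrete, here is a minimal sketch of how a positioning service of this kind could work in principle. It is an illustration under stated assumptions, not Skyhook's or Google's actual method: the `ROUTER_LOCATIONS` table, its made-up entries, and the `estimate_location` function are all hypothetical, and real services presumably weight by signal strength rather than taking a plain average.

```python
from typing import List, Optional, Tuple

# Hypothetical reference database: router MAC address -> (latitude, longitude).
# The entries are invented for illustration only.
ROUTER_LOCATIONS = {
    "00:11:22:33:44:55": (42.3601, -71.0589),
    "66:77:88:99:aa:bb": (42.3603, -71.0591),
}


def estimate_location(visible_macs: List[str]) -> Optional[Tuple[float, float]]:
    """Average the known positions of the routers the device reports seeing.

    Returns None when none of the reported MAC addresses is in the database.
    """
    known = [
        ROUTER_LOCATIONS[mac.lower()]
        for mac in visible_macs
        if mac.lower() in ROUTER_LOCATIONS
    ]
    if not known:
        return None
    lat = sum(point[0] for point in known) / len(known)
    lon = sum(point[1] for point in known) / len(known)
    return (lat, lon)
```

Note that the server receives every address the device chooses to send, whether or not it is in the table. What the client puts in that list is therefore the whole question.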

Google Latitude, which lets users share their current location, has at least 3 million active users and works in a similar way. When a user decides to share his location with any Google service on a non-GPS device, he sends all visible MAC addresses in the vicinity to the search giant, according to the company's own description of how its location services works.

[Update: Google's own “refresher FAQ” states that a user of its geo-location services, such as Latitude, sends all MAC addresses “currently visible to the device” to Google, but a spokesman said the service only collects the MAC addresses of routers. That FAQ statement is the basis of the following argument.]

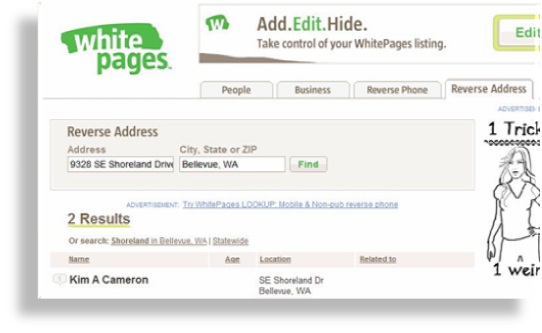

This is disturbing, argues blogger Kim Cameron (also a Microsoft employee), because it could mean the company is getting not only router addresses, but also the MAC addresses of devices such as laptops and iPods. If you are sitting next to a Google Latitude user who shares his location, Google could know the address and location of your device even though you didn't opt in. That could then be compared with all other logged instances of your MAC address to develop a profile of where the device is and has been.

Google denies using the information it collected and, if the company is telling the truth, then only data from unencrypted networks was intercepted anyway, so you have less to worry about if your home wireless network is password-protected. (It's still not totally clear whether only router MAC addresses were collected. Google said it collected the information for devices “like a WiFi router.”) Whether it did or did not collect or use this information isn't clear, but Google, like many of its competitors, has a strong incentive to get this kind of location data.

[Again, and I really do feel for Niraj, the PR leaves the impression that if you have passwords and encryption turned on you have nothing to worry about, but Google's GStumbler report says that passwords and encryption did not prevent the collection of the MAC addresses of phones and laptops from homes and businesses. – Kim]

I really tuned in to these contradictory messages when a reader first alerted me to Niraj's article. It looked like this:

My comments earned their strike-throughs when a Google spokesman assured the Atlantic “the Service only collects the MAC addresses of routers.” I pointed out that my statement was actually based on Google's own FAQ, and it was their FAQ (“How does this location database work?”) – rather than my comments – that deserved to be corrected. After verifying that this was true, Niraj agreed to remove the strikethrough.

How can anyone be expected to get this story right given the contradictions in what Google says it has done?

In light of this, I would like to see Google issue a revision to its “Refresher FAQ” that currently reads:

The “list of MAC addresses which are currently visible to the device” would include the addresses of nearby phones and laptops. Since Google PR has assured Niraj that “the service only collects the MAC addresses of routers”, the right thing to do would be to correct the FAQ so it reads:

- “The user’s device sends a request to the Google location server with the list of MAC addresses found in Beacon Frames announcing a Network Access Point SSID and excluding the addresses of end user devices like WiFi enabled phones and laptops.”

This would at least reassure us that Google has not delivered software with the ability to track non-subscribers and this could be verified by data protection authorities. We could then limit our concerns to what we need to do to ensure that no such software is ever deployed in the future.
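The restriction in the proposed wording is simple to express in client software. The following is a rough sketch of the idea, not a description of any code Google has shipped: the `ObservedFrame` record and the `access_point_macs` function are hypothetical names of my own. The one fact it relies on is that in 802.11 a beacon is a management frame (type 0) with subtype 8, and it is sent by the access point rather than by phones or laptops.

```python
from dataclasses import dataclass
from typing import List

# In 802.11, beacons are management frames (type 0) with subtype 8.
MGMT_TYPE = 0
BEACON_SUBTYPE = 8


@dataclass
class ObservedFrame:
    """Hypothetical record of one frame seen during a Wi-Fi scan."""
    frame_type: int
    subtype: int
    source_mac: str


def access_point_macs(frames: List[ObservedFrame]) -> List[str]:
    """Return the sorted, de-duplicated source MACs of beacon frames only.

    Data frames and other management frames (for example probe requests
    from phones and laptops) are dropped before anything is reported.
    """
    return sorted(
        {
            frame.source_mac
            for frame in frames
            if frame.frame_type == MGMT_TYPE and frame.subtype == BEACON_SUBTYPE
        }
    )
```

A client built this way would have nothing to report about the laptop at the next table, and that is a property a data protection authority could check.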

“Today, I am pleased to announce the latest step in moving our Nation forward in securing our cyberspace with the release of the draft National Strategy for Trusted Identities in Cyberspace (NSTIC). This first draft of NSTIC was developed in collaboration with key government agencies, business leaders and privacy advocates. What has emerged is a blueprint to reduce cybersecurity vulnerabilities and improve online privacy protections through the use of trusted digital identities.”
